This is the second part of dockerizing an Angular 6 and Spring Boot app.

Part 1 here: http://dev.basharallabadi.com/2018/08/part-1-backend-docker-for-angular.html

I followed this blog:

https://mherman.org/blog/2018/02/26/dockerizing-an-angular-app/

So basically there are three steps:

1- create a Dockerfile

2- create a docker-compose file

3- run docker-compose build, then docker-compose up

Here are the files I'll be explaining to achieve this:

1- Dockerfile

This Dockerfile uses a multi-stage build, which means it uses multiple Docker images to produce the final image.

Why?

Because the image we need to build the Angular app requires a lot of dependencies that are not needed to run the app.

In the first stage of the Dockerfile:

- it starts from a node image

- prepares the app directory

- copies package.json from the source code directory to the app directory in the container

- executes npm install, which installs all the node dependencies

- installs Angular CLI globally (version 6)

- copies everything from the root directory to the 'app' directory created before

- executes ng build to get the compiled app code that will be served by a web server

Note that for this step I'm specifying the build environment, which is passed as an arg to the Dockerfile.

This command used to be: ng build --env=$build_env, but Angular 6 replaced the --env flag with --configuration.

The reason we build the app is that ng serve is good for local development only.

It is not meant for serving the app to others, and in my case it didn't seem to accept remote requests over the network.

I wanted to use Docker to make it faster to deploy the app on different environments, not to develop there, so I chose to build the app.

The reason I pass the environment is to tell Angular which environment.ts file to use: I wanted to be able to debug this build if needed, rather than have a production build that is harder to debug.

Second stage:

- we start from an official nginx image

- copy the build output of the first stage to the html directory of the nginx container (the path of the html directory is defined by the image itself)

- copy our custom nginx configs to overwrite the default configs

[ the reason for this is to proxy our API calls from Angular through nginx to the Spring Boot REST API container, so we don't have to enable CORS (Cross-Origin Resource Sharing). Note that I used the IP of the instance to route the requests to the Boot container on port 8080 ]

- expose port 8008 from this container to the outside network

- start nginx

Things worth noting:

* while building the Angular app, the build was failing with an out-of-disk-space error. This is due to the huge size of the node_modules directory, and because Docker doesn't clean up the intermediate images between the first and second stages.

So to avoid any issues, make sure to clean up the unused Docker artifacts before/after the image build:

- docker system prune -a -f

- docker volume ls -qf dangling=true | xargs -r docker volume rm

Saturday, August 25, 2018

Monday, August 20, 2018

PART 1 [Backend] - Docker for Angular + Spring Boot

I have been working on an Angular 4 (upgraded to 6) application backed by a Spring Boot REST API. The repositories:

1- api: https://github.com/blabadi/nutracker-api

2- angular: https://github.com/blabadi/nutritionTracker

This post is about adding Docker to these apps to make them easier to deploy and share.

Disclaimer: this is not by any means a best-practice article; it was more of a hands-on way to learn about the Docker workflow and the challenges one may face.

Part 1: Dockerizing the backend project (nutracker-api)

I was testing this process on an Amazon micro instance, and these are the steps I followed:

Prerequisite:

- install Docker on AWS:

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/docker-basics.html

1- add a Dockerfile (pointing to a pre-built jar for now)

reference: https://www.callicoder.com/spring-boot-docker-example/

This Dockerfile is simple. It:

- gets a JDK image

- copies our pre-built jar from the build output directory

- starts the jar (which is a Spring Boot jar that starts an embedded web server)

To understand the details of the Dockerfile contents, see:

reference: https://spring.io/guides/gs/spring-boot-docker/

2- install Docker Compose

https://magescale.com/installing-docker-docker-compose-on-aws-linux-ami/

Docker Compose helps in building and running multiple containers with one command.

In my case I needed to run my Spring Boot jar and have it connected to a MongoDB instance (also hosted in a Docker container).

3- add a docker-compose file that includes MongoDB

https://medium.com/statuscode/dockerising-a-node-js-and-mongodb-app-d22047e2806f

The compose file does the following, in order:

1- prepares and runs a container for mongo by using the mongo image

2- mounts a host path into the container to persist the db data

3- builds a container from a Dockerfile located in the same directory

4- exposes the API on container port 8080

Note that we pass the mongo container name as an environment variable to the API container, which uses that variable to configure the mongo client (MongoConfig.java).
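As a rough illustration of that last note, here is a minimal sketch of how the API side could turn such an environment variable into a MongoDB connection string. This is not the actual MongoConfig.java: the variable names MONGO_HOST and MONGO_PORT, the database name and the class itself are assumptions made up for the example.

```java
import java.util.Map;

/** Sketch: build a MongoDB connection string from container environment variables. */
class MongoUriExample {

    // Hypothetical variable names; the real compose file may use different ones.
    static final String HOST_VAR = "MONGO_HOST";
    static final String PORT_VAR = "MONGO_PORT";

    /** Builds mongodb://host:port/db, falling back to localhost:27017 when unset. */
    static String mongoUri(Map<String, String> env, String database) {
        String host = env.getOrDefault(HOST_VAR, "localhost");
        String port = env.getOrDefault(PORT_VAR, "27017");
        return "mongodb://" + host + ":" + port + "/" + database;
    }

    public static void main(String[] args) {
        // Inside the compose network the host would be the mongo container name.
        // "nutracker" is a placeholder database name.
        System.out.println(mongoUri(System.getenv(), "nutracker"));
    }
}
```

Falling back to localhost keeps the same code usable when the API runs outside Docker against a local mongo.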

You may find people adding a 'link' key to the docker-compose file (or when they run the container):

http://www.littlebigextra.com/how-to-connect-to-spring-boot-rest-service-to-mongo-db-in-docker/

But that is an older way to do it. In my example I'm using the Docker network that is created by default when you run a compose file, in which case you can connect to containers by their name out of the box:

https://dev-pages.info/how-to-run-spring-boot-and-mongodb-in-docker-container/

So in summary, for the backend project I did the following:

1- create a Dockerfile to run the Spring Boot jar based on a JDK image

2- create a docker-compose file to spin up a mongo container and build the Dockerfile from step 1

3- run the commands in the following sequence:

a) build the jar (-x test skips the tests): ./gradlew build -x test

b) docker-compose build, which builds the images

c) docker-compose up, which runs the containers

The three commands above are what you run whenever you change your code and want to (re)deploy it somewhere.

It seems straightforward, but I faced a few issues before I could boil it down to these steps and learn from my mistakes.

Notes/hints related to this part:

- to stop all running Docker containers: docker stop $(docker ps -a -q)

- docker-compose up:

doesn't rebuild the images (in case you change the code or the Dockerfile), so if you change something in the jar, it won't be picked up automatically

- docker-compose build:

rebuilds our Java app image (the nutracker-api Spring Boot application)

- to access the API from a browser/REST client remotely, the port has to be published on the host with:

ports:

- <host port>:<container port>

in docker-compose. Using:

expose:

- <port>

is not enough to publicly access the Docker containers, but it can be enough for local communication between containers on the same Docker network.

- There are Gradle & Maven plugins to automate building the Docker image and pushing it to Docker Hub as part of your build steps, which is a more practical solution and saves you from having to build the code on the host machine as I had to do. I just opted out of it to keep things simple.

https://www.callicoder.com/spring-boot-docker-example/#automating-the-docker-image-creation-and-publishing-using-dockerfile-maven-plugin

Part 2: Dockerizing the Angular app will follow in the next blog post

Monday, August 6, 2018

Setting up TensorFlow backed Keras with GPU in Anaconda - Windows

I wanted to train a simple deep learning example in Keras on my GPU instead of the CPU, after learning that it supports CUDA, so I had to search for how to set it up and ended up with this summary:

Pre requisites :

1- Anaconda installed

2- set up NVIDIA CUDA 9.0 https://developer.nvidia.com/cuda-90-download-archive

3- set up NVIDIA cuDNN 7 (for CUDA 9.0) https://developer.nvidia.com/rdp/cudnn-download

https://docs.nvidia.com/deeplearning/sdk/cudnn-install/index.html#install-windows

Steps:

0) run Anaconda Prompt

1) install conda packages

conda create --name tf-gpu-keras-p35 python=3.5 pip

activate tf-gpu-keras-p35

conda install jupyter

conda install scipy

conda install scikit-learn

conda install pandas

pip install tensorflow-gpu

pip install keras

The following conda installs didn't work for me. Installing Keras this way pulled in tensorflow 1.1.0, which kept overriding tensorflow-gpu, and you end up with an empty tensorflow module:

conda install -c conda-forge keras

conda install -c conda-forge keras-gpu

conda install -c conda-forge tensorflow

conda install -c anaconda tensorflow-gpu

2) Set Keras to use the tensorflow backend, in case it uses theano (default)

go to Anaconda3/envs/<env name>/etc/activate.d/keras_activate.bat

go to <User home directory>\.keras\keras.json and change "backend" to tensorflow

3) run jupyter:

jupyter notebook

Wednesday, August 1, 2018

Hibernate Objects,Sessions across threads - Illegal attempt to associate a collection with two open sessions

In the land of Hibernate, the concept of a Session is important.

It tracks the changes that happen to Hibernate objects from the moment you open it.

You can't actually do much without it; it's your entry point to Hibernate's capabilities.

So if you want to load an object, you have to obtain a session instance first and then load the object:

Session s = sessionFactory.getCurrentSession();

MyObject mo = s.byId(MyObject.class).load(123);

Now that object (mo) is associated with that session.

If you do something like

mo.setName("new Name");

the session will track that this object is dirty. It does much more, which you can read about.

What I want to get to is that if you have to do some background work with your object, you have to be careful that:

1- the session is not thread safe (i.e. you can't pass it between threads and assume no concurrency issues will happen)

2- the objects associated with one session cannot be associated with another open session

So if you try to do something like this:

// this is pseudo code, just to get the point across:

MyObject mo = dao.load(123);

new Thread(() -> {

    mo.setName("abc");

    dao.update(mo);

}).start();

// Data access methods (usually in a DAO)

MyObject load(int id) {

    return sessionFactory.getCurrentSession().byId(MyObject.class).load(id);

}

void update(MyObject m) {

    Session s = sessionFactory.getCurrentSession();

    s.update(m);

}

That won't work, and Hibernate will complain:

Illegal attempt to associate a collection with two open sessions

because we passed the object between two threads while the first session could still be open when the other thread starts.

What makes it worse is that you probably won't see the exception message, because the other thread won't stop your main thread's execution, and you will never know why the update failed.

So it is good practice to have proper error handling in background threads.

I had to learn this the hard way. I was debugging something similar where my update had to be done in the background, and I spent a few hours chasing a ghost issue without a clue until I added some good old stdout/stderr print statements, because debugging multiple threads can be hard when there are a lot of moving parts.
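To make the error-handling point concrete, here is a minimal sketch in plain Java, with no Hibernate involved. The class and method names are made up for the example, and the exception thrown in main only imitates the Hibernate failure. It attaches an uncaught-exception handler to the worker thread so that a failure is logged and can be inspected by the caller.

```java
import java.util.concurrent.atomic.AtomicReference;

class BackgroundErrorExample {

    /** Runs the task on a new thread and returns the exception it threw, or null. */
    static Throwable runAndCapture(Runnable task) {
        AtomicReference<Throwable> failure = new AtomicReference<>();
        Thread worker = new Thread(task, "background-update");
        // Record and log anything the task throws instead of letting it pass unnoticed.
        worker.setUncaughtExceptionHandler((thread, error) -> {
            failure.set(error);
            System.err.println(thread.getName() + " failed: " + error.getMessage());
        });
        worker.start();
        try {
            worker.join(); // wait here only so the example can return the result
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return failure.get();
    }

    public static void main(String[] args) {
        Throwable error = runAndCapture(() -> {
            // Stand-in for the failing dao.update(mo) call.
            throw new IllegalStateException(
                "Illegal attempt to associate a collection with two open sessions");
        });
        System.out.println("captured: " + error);
    }
}
```

In a real application you would probably not join the worker, and would send the error to your logging framework instead of System.err.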

Solution:

1- don't pass the Hibernate object to the other thread

2- pass the id of the object instead (or anything that lets you reload it quickly in the other thread), so that the other thread works with its own instance.

// transaction A

MyObject mo = dao.load(123); // associated with session 1

// do stuff with mo

new Thread(() -> {

    // transaction B

    // reload the Hibernate object; don't use the one from the other thread

    MyObject mo2 = dao.load(123); // this will be associated with session 2

    mo2.setName("abc");

    dao.update(mo2);

}).start();
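The same idea can be tried without a database. The sketch below uses a hypothetical in-memory Dao whose load method returns a fresh instance on every call, roughly imitating how each session hands out its own object. None of these classes come from Hibernate or from the original project. Only the id crosses the thread boundary.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class PassIdExample {

    static class MyObject {
        final int id;
        String name;
        MyObject(int id, String name) { this.id = id; this.name = name; }
    }

    /** Hypothetical in-memory DAO: every load returns a new instance. */
    static class Dao {
        private final Map<Integer, String> rows = new ConcurrentHashMap<>();
        MyObject load(int id) { return new MyObject(id, rows.get(id)); }
        void update(MyObject m) { rows.put(m.id, m.name); }
    }

    /** Passes only the id to the worker thread, which reloads the object itself. */
    static void renameInBackground(Dao dao, int id, String newName) {
        Thread worker = new Thread(() -> {
            MyObject fresh = dao.load(id); // reloaded inside the worker
            fresh.name = newName;
            dao.update(fresh);
        });
        worker.start();
        try {
            worker.join(); // wait only so the example can read the result
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        Dao dao = new Dao();
        dao.update(new MyObject(123, "old"));
        MyObject mo = dao.load(123); // instance owned by the main thread
        renameInBackground(dao, mo.id, "abc");
        System.out.println(dao.load(123).name);
    }
}
```

The instance held by the main thread is never touched by the worker, which is the property that avoids the two-sessions problem.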

Other solutions involve using evict() and merge().

Cheers !

read more:

1- https://developer.jboss.org/wiki/Sessionsandtransactions?_sscc=t

2- https://stackoverflow.com/questions/11673303/multithread-hibernateexception-illegal-attempt-to-associate-a-collection-with

3- https://stackoverflow.com/questions/11237924/hibernate-multi-thread-multi-session-and-objects

Subscribe to:

Posts (Atom)

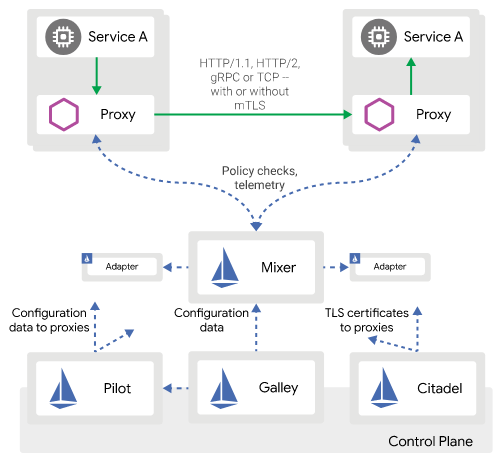

Istio —simple fast way to start

istio archeticture (source istio.io) I would like to share with you a sample repo to start and help you continue your jou...

-

So RecyclerView was introduced to replace List view and it's optimized to reuse existing views and so it's faster and more efficient...

-

In the previous part we added the first call to the async api to do search, now we will build on that to call more apis, and to add more c...

-

In this post I'll explain the required work to create a rest API utilizing both spring and hibernate version 4, and the configuration wi...